News

Is debriefing conducted by AI more effective?

Deception, or the deliberate misleading of participants, is used in psychological research. Although participants are debriefed after the study, the continued influence effect of misinformation can cause false information to keep shaping their thinking. Iwona Dudek’s study examines whether talking to AI can make this procedure more effective than a traditional text-based debriefing.

INTRODUCTION | A good psychological study simulates reality in order to elicit a natural reaction from the participant, so it is sometimes necessary to give false information; this is called deception. Deception is especially necessary in studies of memory distortion: to discover how false memories are formed, researchers have to present information that is sometimes untrue. In short, for the sake of science, participants sometimes have to be lied to. At the end of a good psychological experiment, a so-called debriefing is carried out to undo the deception. It explains the purpose of the study and the reason why participants were misled. Unfortunately, contrary to expectations, debriefing is sometimes ineffective: even after it, researchers observe the continued influence effect (CIE) of misinformation, in which false information keeps influencing participants’ reasoning long after the study is over. A well-known example of CIE is the belief in a supposed link between vaccines and autism. The myth persists despite many efforts by scientists to debunk it.

🟦 In February 1998, The Lancet published a short article by Andrew Wakefield claiming a link between the measles, mumps and rubella (MMR) vaccine and the diagnosis of neurodevelopmental problems. Twelve children aged 3 to 10 were reported to have shown behavioral changes soon after vaccination, i.e. within 1 to 14 days (6.3 days on average). The article became the basis for parents’ concerns and for abandoning the vaccination schedule. Interestingly, the first articles showing no link between autism and vaccines were published soon after the Lancet paper. Then reports began to emerge about the author’s lack of integrity: Wakefield was said to have deliberately selected children for the study and tampered with the data. A journalistic investigation revealed a conflict of interest. Wakefield had been paid in connection with lawsuits that parents convinced of the harmfulness of vaccines were preparing against vaccine manufacturers. All they lacked was scientific proof of such a link, i.e. an article in a serious journal. A scandal broke out, and in 2010 The Lancet retracted the article. Unfortunately, despite the exposure of the falsified and manipulated data, and despite information campaigns and the medical community’s appeals to vaccinate not only children but also adults themselves, the anti-vaccine movement is still going strong. Every day, many parents face the decision whether to vaccinate their child, and many of them decide against it.

What does debriefing look like in online research? Usually it is text displayed on a screen, and such a slide is easy to skip. “In practice, this can limit the ability to actively process the information received in debriefing,” notes Iwona Dudek, M.A., a doctoral student at the Institute of Psychology at the Jagiellonian University and winner of a PSPS grant. “Large language models can therefore be a promising auxiliary tool in debriefing procedures after exposure to false information. The project attempts to improve the debriefing procedure by engaging participants in conversation with AI.”

To demonstrate the effectiveness of AI-assisted debriefing, the study needs to show that debriefing conducted entirely by ChatGPT is more effective than standard debriefing.

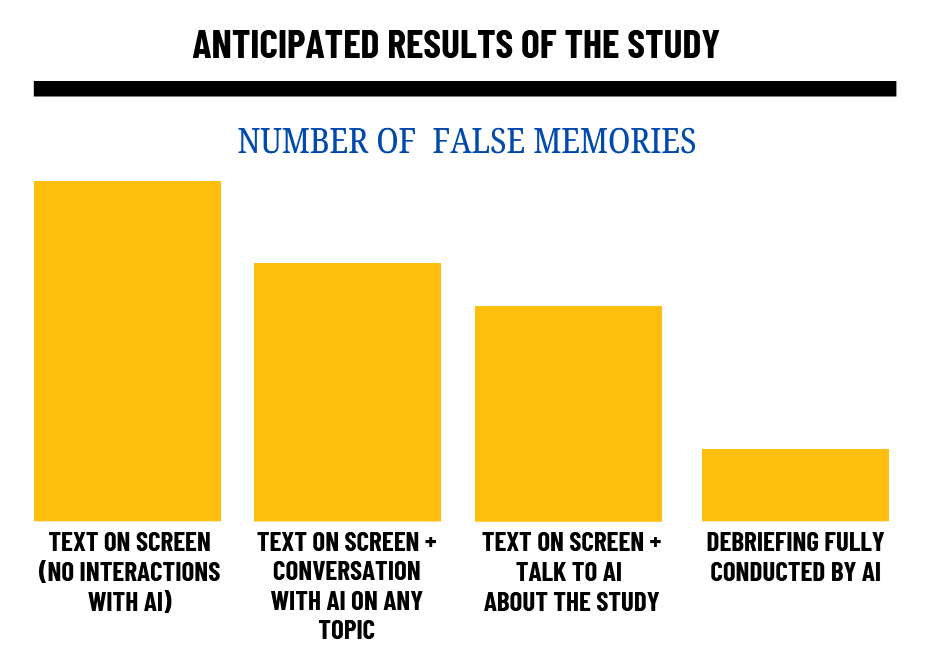

METHODOLOGY | In the study, the independent variable is the experimental condition, which has four levels: the four groups to which participants are assigned (necessarily at random!). Each group receives a different type of debriefing.

1️⃣ The first group consists of participants who are shown a classic debriefing in the form of a text. They do not have any conversation with the LLM.

2️⃣ The second group consists of people who are also shown the debriefing text on the screen and can talk to the LLM, but about a topic unrelated to the study, for example the weather.

3️⃣ In the third condition, participants are shown the classic text debriefing, as in the previous two conditions, but this time they can talk to the AI about the study. The AI has been given information about the study.

4️⃣ In the fourth and most interesting condition, participants talk to the AI about the study without seeing any debriefing text on the screen; the debriefing is conducted entirely by the AI.

HYPOTHESIS | In a second measurement, conducted a week later, participants who talked to the AI about the study will report fewer false memories and beliefs than participants who were only shown a slide or who talked to the AI about a topic unrelated to the study.

PARTICIPANTS | Participants will be adults, and the planned sample size is N = 180.
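The post states only that participants are assigned to the four conditions at random and that N = 180. As a minimal sketch, assuming a balanced design with equal group sizes (45 per condition, which the post does not specify), random assignment could be done by shuffling a list of condition labels. The labels and the `assign_conditions` helper are hypothetical illustrations, not the study’s actual procedure.

```python
import random

# Hypothetical labels for the four debriefing conditions described above.
CONDITIONS = [
    "text_only",           # 1: classic text debriefing, no LLM conversation
    "text_plus_offtopic",  # 2: text debriefing + off-topic chat (e.g. the weather)
    "text_plus_ai_study",  # 3: text debriefing + chat with the AI about the study
    "ai_only",             # 4: debriefing conducted entirely by the AI
]


def assign_conditions(n_participants, conditions, seed=None):
    """Return a shuffled list of condition labels, one per participant.

    Assumes a balanced design: the sample must divide evenly across conditions.
    """
    if n_participants % len(conditions) != 0:
        raise ValueError("sample size must be divisible by the number of conditions")
    per_group = n_participants // len(conditions)
    # Build equal-sized blocks of labels, then shuffle them into random order.
    labels = [c for c in conditions for _ in range(per_group)]
    rng = random.Random(seed)
    rng.shuffle(labels)
    return labels


assignment = assign_conditions(180, CONDITIONS, seed=2024)
counts = {c: assignment.count(c) for c in CONDITIONS}
print(counts)  # each condition gets 45 participants
```

Shuffling a pre-balanced list keeps the groups equal in size while leaving each participant’s condition to chance; the seed only makes the sketch reproducible.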

In this post I used:

- Grant submission by Iwona Dudek

- The illustration on the home page (i.e., the highlight image) was generated by artificial intelligence (Microsoft Copilot / DALL·E).